What is an AI Agent? A Simple Introduction

This is the first post of the Xpress AI and Agents Advent Calendar 2024 series. This series of blog posts highlights various parts of the Xpress AI ecosystem and community. Feel free to claim an empty date and write something to help the community.

The team behind Xpress AI and I are no strangers to agents. We got our start implementing reinforcement learning algorithms such as DQN and A3C and putting them to work in robots that could use elevators by themselves. The capabilities of Large Language Models (LLMs) have caused a resurgence of interest in agents, helped by multi-modal models that can now also see. Putting LLMs to use in automating work has been dubbed “digital co-workers” by Google and others. Unfortunately, LLMs fall short of the capabilities needed to really work alongside humans in a company. Cracking this problem would unlock billions of dollars of value. In this post, I’ll show how our approach to agents actually looks back to older technology to create AI systems capable of being more than just glorified chatbots.

The Evolution of AI Agents

The idea of ‘agents’ living inside a computer is an incredibly old one, not just in robotics but even in personal computers. Back in 1984, Steve Jobs talked about them in an interview in Access Magazine:

Well, the types of computers we have today are tools. They’re responders: you ask a computer to do something and it will do it. The next stage is going to be computers as “agents.” In other words, it will be as if there’s a little person inside that box who starts to anticipate what you want. Rather than help you, it will start to guide you through large amounts of information. It will almost be like you have a little friend inside that box. I think the computer as an agent will start to mature in the late ’80s, early ’90s.

Other than the reference to the ’80s and ’90s, a person could say the same thing today. It wasn’t a prediction made in a vacuum: agent-based systems were the hot topic in AI back when Steve Jobs was talking about this. The concept was originally born from early AI researchers designing the systems necessary for robots to interact with the real world. They needed the robot to perceive the environment, reason about it, and take actions, which led to the fundamental agent architecture that is often used in robotic systems such as self-driving cars.

The Promise and Limitations of LLM Agents

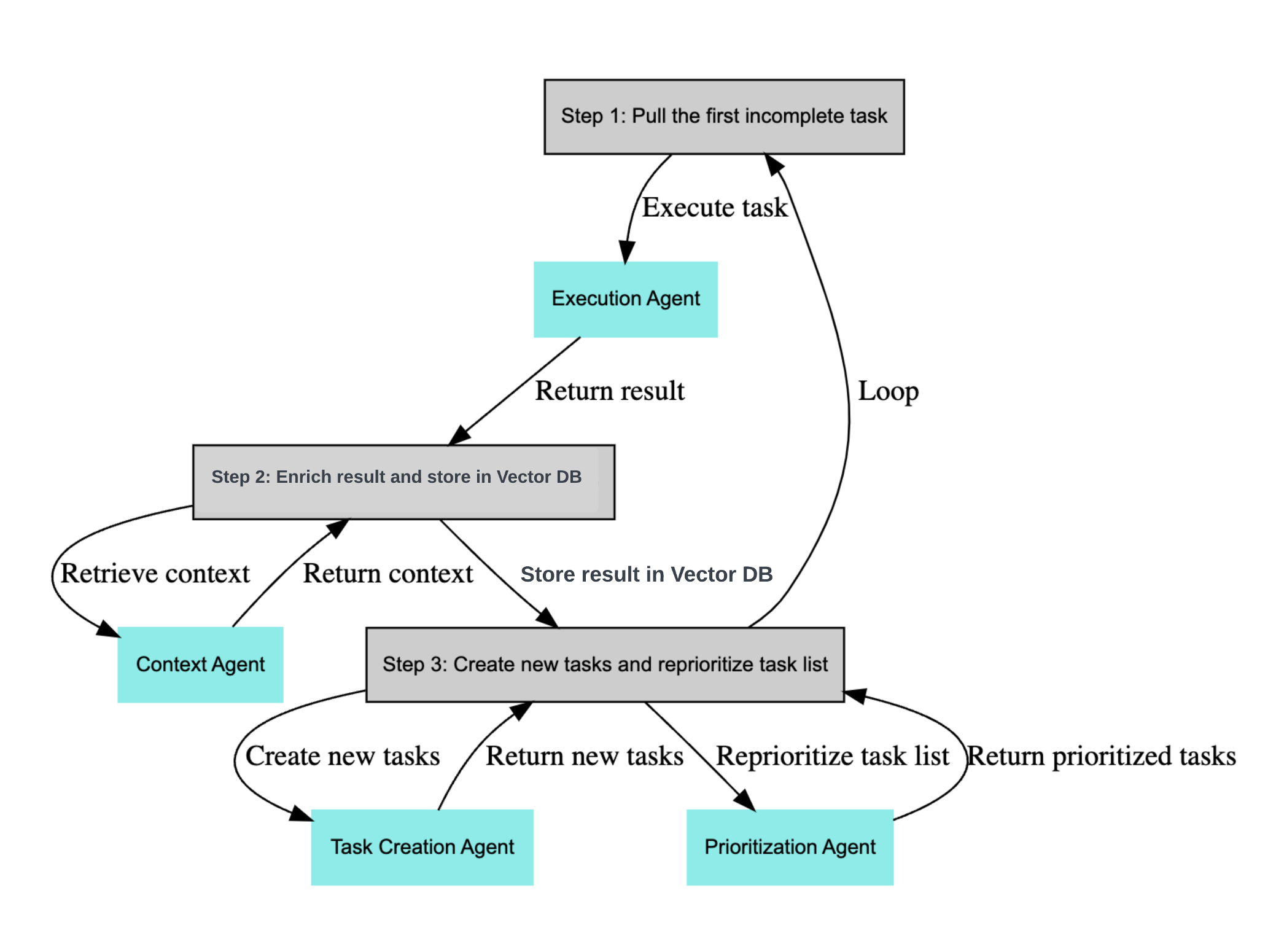

With the arrival of powerful language models, we saw a new wave of experimentation with AI agents. Projects like BabyAGI and AutoGPT captured people’s imagination by showing how LLMs could break down tasks and attempt to execute them. These systems seemed to provide glimpses of generally intelligent behavior, leading many to believe that with powerful enough LLMs, we could finally create the agents Steve Jobs envisioned.

However, when people started using these systems for real-world tasks, limitations became apparent. BabyAGI would create endless task lists, never truly finishing what it started. AutoGPT would often hallucinate actions and capabilities, struggling when reality didn’t match its increasingly generic and optimistic plans.

These limitations weren’t just about context window size. Even with models capable of processing 128,000 or even 1,000,000 tokens, the fundamental problems remained. The agents couldn’t effectively learn from their experiences, struggled to adapt to unexpected situations, and often failed to ground their actions in reality.

Making Agents Work

Our experience with reinforcement learning taught us something crucial: agents need to be grounded in reality and capable of adapting to feedback. This led us to rethink how AI agents should work:

First, we found that LLMs are much better at determining the next obvious action than at creating long-term plans. Instead of trying to plan everything upfront, effective agents should focus on making good decisions moment by moment, much like how reinforcement learning agents operate.

Second, memory needs to be active and relevant. Rather than drowning the agent in context, we need systems that can actively look up relevant information when needed, keeping the signal-to-noise ratio high.
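To make the idea of active memory a little more concrete, here is a minimal sketch. It is not how Xpress AI implements memory; the `Memory` class, its `remember` and `lookup` methods, and the word-overlap scoring are all hypothetical stand-ins chosen to keep the example small. A real system would more likely use embeddings or a search index, but the shape is the same: store notes as they arrive, and pull back only the few that match the current question.

```python
from dataclasses import dataclass, field


def _tokens(text: str) -> set[str]:
    """Lowercase a string and split it into a set of words."""
    return set(text.lower().split())


@dataclass
class Memory:
    """Hypothetical note store (an assumption for illustration only)."""

    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        """Store a note for later retrieval."""
        self.notes.append(note)

    def lookup(self, query: str, k: int = 2) -> list[str]:
        """Return up to k notes that share the most words with the query."""
        query_words = _tokens(query)
        scored = [(len(query_words & _tokens(note)), note) for note in self.notes]
        # Drop notes with no overlap so irrelevant text never reaches the prompt.
        relevant = [(score, note) for score, note in scored if score > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [note for _, note in relevant[:k]]


memory = Memory()
memory.remember("The elevator on floor 3 is out of service")
memory.remember("Invoices are due on the last day of the month")
memory.remember("The cafeteria closes at 2pm")

# Only the note about the elevator is passed on to the agent.
context = memory.lookup("Which elevator should I take to floor 5?")
print(context)
```

The agent’s prompt then contains one relevant note instead of the whole notebook, which is the signal-to-noise point made above.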

Finally, agents need boundaries. In the real world, tasks don’t go on forever. By giving agents a maximum number of actions they can take to accomplish a goal, we ensure they either complete the task or gracefully handle failure, just like a human would.
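The first and third principles can be sketched together as a small loop. This is an illustration under assumptions, not the Xpress AI implementation: `run_agent`, `choose_action`, `apply_action`, `is_done`, and `max_actions` are hypothetical names, and the “environment” is just a counter that needs to reach 5. In a real agent, `choose_action` would be where the LLM is asked for the next obvious step.

```python
from typing import Callable


def run_agent(
    choose_action: Callable[[int], str],
    apply_action: Callable[[int, str], int],
    is_done: Callable[[int], bool],
    state: int,
    max_actions: int = 10,
) -> tuple[bool, int, int]:
    """Step until the goal is reached or the action budget runs out.

    Returns (succeeded, final_state, actions_used).
    """
    for used in range(max_actions):
        if is_done(state):
            return True, state, used
        # Decide only the next step from the current state; no upfront plan.
        action = choose_action(state)
        # Apply the action and observe the new state (the feedback).
        state = apply_action(state, action)
    # Budget exhausted: report whether the last action happened to finish the job.
    return is_done(state), state, max_actions


# Toy environment (an assumption for illustration): reach the number 5.
TARGET = 5


def choose(state: int) -> str:
    return "increment" if state < TARGET else "decrement"


def apply(state: int, action: str) -> int:
    return state + 1 if action == "increment" else state - 1


def done(state: int) -> bool:
    return state == TARGET


success = run_agent(choose, apply, done, state=0, max_actions=10)
out_of_budget = run_agent(choose, apply, done, state=0, max_actions=3)
print(success, out_of_budget)
```

With a budget of 10 the toy agent reaches the goal in 5 actions. With a budget of 3 it stops at state 3 and returns a failure the caller can handle, for example by escalating to a human, rather than looping forever.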

These principles might seem simple, but they make the difference between agents that sound impressive but fail in practice, and agents that can actually get work done.

What’s Next?

In our next post, “Your First AI Agent with Xpress AI”, we’ll show you how to build these agents yourself. You’ll learn to set up the core architecture, define custom modules, connect to real-world environments, and build practical, useful agents that can make a real difference in your work.

The future of AI isn’t just about better models or simpler automation; it’s about creating genuine digital workers that can understand, learn, and act effectively in the real world. And with modern tools and frameworks, that future is more accessible than ever.