Even though most companies are keen to adopt AI in their operations, one of the common barriers is actually the cost-intensive data labelling process. DL projects especially rely on a huge dataset to obtain good performance. So how can we overcome this?

Possible situations:

- You have a labelled dataset with a huge number of samples - Thank God

- You have a labelled dataset with a small number of samples - Good luck

- You have none labelled dataset - Goodbye

If you’re in the second or third situation, read along. This might interest you.

Synthetic Dataset using Unity

I found an interesting package by Unity Technologies called Perception. The tutorial is extremely good for beginners in Unity like me. Do refer the tutorial, so that I can keep this post short and sweet.

I gave this approach a try, and yes it does work in a real-world application. For our project, one of the models required is the ID card detector. Here’s a summary of the experiment:

- Design ID card object in Blender

- Scan my own ID card (front and back) and import to the object as the material

- Import ID card object + material into Unity

- Follow the Perceptron tutorial step-by-step, replacing foreground objects with the ID card

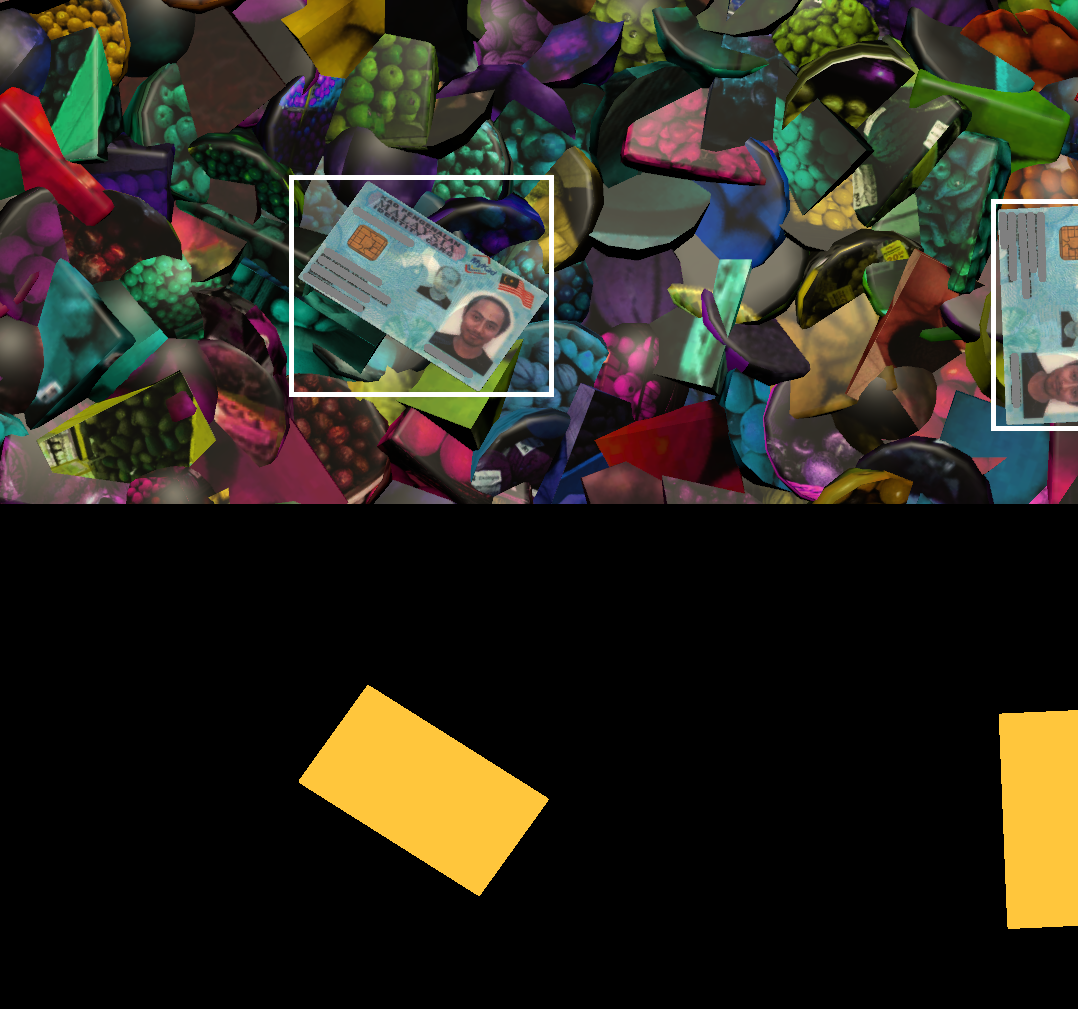

- The outputs will be JSON file of labels and snapshots of scenes with the ID card randomly positioned and oriented on a randomly textured and coloured background (shown in Figure 1)

- Train using YOLOv3 for object detection, U-Net for image segmentation and Resnet50 for Landmark Detection

- Test model on realtime webcam input

Verdict

- Definitely useful

- Easy to generate

- Applicable in most (if not all) application

I have read on how synthetic dataset is being used for training models such as using ship simulations in the development of the Autonomous Ship obstacle avoidance system.

I have also seen hobbyist train reinforcement learning for car navigation through grabbing screenshots in games as the dataset.

Personally, I think this might be a route worth exploring and expanding.