Recently, I’ve been getting into Kendo. There’s something incredibly satisfying about swinging a shinai around. Sure, it teaches you discipline and form, but let’s be honest - whacking people with a shinai is also a lot of fun. I’ve been lucky enough to train at one of Malaysia’s strongest (if not the strongest) dojos: Ai Kendo.

Training happens three times a week, but as a beginner without bogu (armor), the Sunday keiko (practice) session that builds fundamentals is the one for me. For the rest of the week, I’ve been given homework: practice suburi (repeated sword swings) at home. The goal is at least 100 swings a day. Early on (and even now), I often find myself wondering: am I doing these swings right?

This isn’t just perfectionism speaking - in Kendo, proper form goes a long way. I was paranoid that I would reinforce bad habits that would be harder to break later.

Of course, my sensei corrects me during keiko practice, but with only one session a week - and my time in Malaysia being limited - I was desperate to improve faster. That’s when I started thinking: maybe I could make something to help me out.

This sparked my pet project. My goal was to build a simple proof of concept (POC) to analyze Kendo movements using AI. It’s been an off-and-on effort (thanks to juggling work and other commitments), but I finally got it to a functional state.

Disclaimer: This blog post is written from the perspective of a beginner in Kendo. Always consult your local sensei for the best guidance and proper form instruction!

Pose Estimation: The Starting Point

My first idea was to use pose estimation. It’s lightweight, portable, and open source. The concept was simple: use MediaPipe BlazePose to locate the thumb, middle finger, and pinkie of each hand, then use those three points to estimate the palm coordinate. Then, draw a line between the two palms to simulate the shinai and calculate its angle.

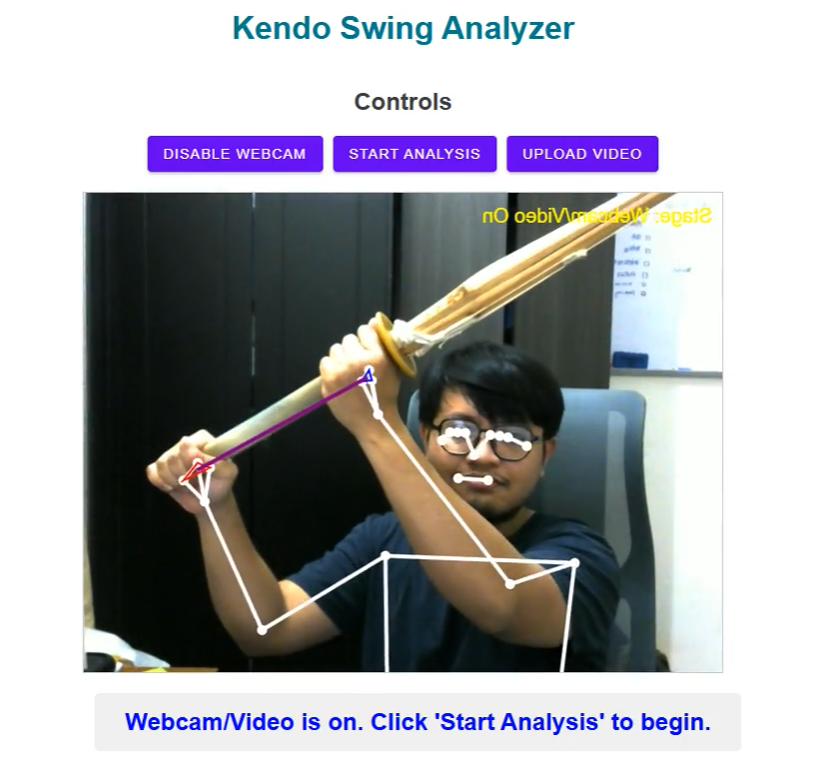
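The palm-and-line idea above can be sketched in a few lines. This is only a minimal illustration, not the project's actual code: it assumes each landmark is already available as an `(x, y)` pixel tuple, that the palm is approximated by the centroid of the three finger points, and that the angle is measured against the horizontal. The function names `palm_center` and `shinai_angle_deg` are made up for this example.

```python
import math

def palm_center(thumb, middle, pinky):
    """Approximate the palm as the centroid of three hand landmarks (x, y)."""
    xs = (thumb[0], middle[0], pinky[0])
    ys = (thumb[1], middle[1], pinky[1])
    return (sum(xs) / 3.0, sum(ys) / 3.0)

def shinai_angle_deg(left_palm, right_palm):
    """Angle of the palm-to-palm line against the horizontal, in degrees.

    Image y grows downward, so dy is flipped to make "up" positive.
    The line runs from the left palm (assumed lower on the grip)
    to the right palm (assumed closer to the tip).
    """
    dx = right_palm[0] - left_palm[0]
    dy = -(right_palm[1] - left_palm[1])
    return math.degrees(math.atan2(dy, dx))

# Hypothetical pixel coordinates, for illustration only.
left = palm_center((100, 300), (110, 310), (105, 320))
right = palm_center((140, 260), (150, 270), (145, 280))
angle = shinai_angle_deg(left, right)
```

Because the two palms sit only a hand's width apart, a few pixels of landmark jitter can swing this angle noticeably, which is worth keeping in mind when reading the results.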

Here’s an early attempt using a selfie view:

Pretty cool, right? It was able to recognize the key points, namely the hands gripping the shinai and the arms. The next step was to track the swings. From the starting kamae position (where the shinai points at the opponent’s throat) to the stopping point of a small men cut, I wanted to see if I could make it check whether my form was correct. In the first scenario (a close-up like this one), the results were decent, but when I tested it on a real video of me performing a cut…

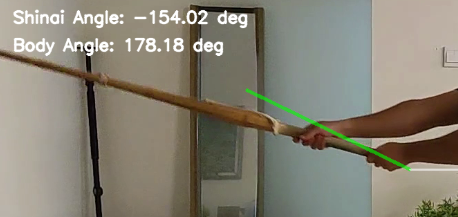

…it didn’t work as well. While it gave a rough idea of the movement, the calculated shinai angle was off, and the error became more obvious once the line was extended along the full length of the shinai. I suspected the pose estimation model struggled mainly due to:

- Distance and resolution issues: The camera in a real setup is further away, making tracking less accurate.

- Obstructed hands: My camera isn’t the best, but the way the shinai is held can also hide one hand from view, which throws off the model.

And of course, if someone’s wearing bogu, this method breaks down completely. The gloves would obscure the hands, and the hakama hides the feet, making pose estimation unreliable. As much as I didn’t want to, I had to admit that pose estimation alone wasn’t enough.

Enter Instance Segmentation

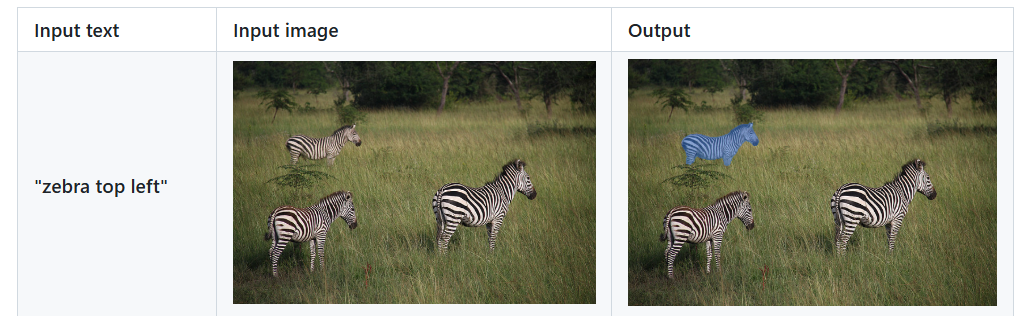

So, what’s next? I realized the shinai itself should be recognized. Projecting its position from the palms just wasn’t cutting it. I remembered an earlier project we worked on involving instance segmentation. Back then, we trained a custom Mask R-CNN model. But training from scratch felt like overkill for a quick POC, and most Kendo-specific segmentation datasets aren’t open source. That’s when, with a nudge from a senior, I discovered EVF-SAM2.

EVF-SAM2 is based on Meta’s Segment Anything Model (SAM) and can perform instance segmentation with just a text prompt. No custom training or datasets required. It works like magic: you describe what you want to segment, and it delivers high-quality results. Currently, it only works with English prompts, but when I prompted it with:

“A shinai (竹刀) is a Japanese sword typically made of bamboo used for practice and competition in kendō”

The model was able to recognize the shinai! It was then just a matter of hooking it up to my existing codebase. Instead of relying on hand positions, I used EVF-SAM2 to directly segment the shinai from the video frames. Here’s how it looks:

From the segmented mask, I computed the principal axis to determine the shinai’s angle. I was pretty happy with the results. The model consistently recognized the shinai in the most important frames (first and last), and calculating the angle became a straightforward process.
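For readers curious what "computing the principal axis" can look like, here is a minimal sketch. It assumes the segmentation result is available as a 2D binary NumPy array and uses a plain PCA over the foreground pixel coordinates. This is one common way to do it and not necessarily how the project implements it. The name `principal_axis_angle_deg` is hypothetical.

```python
import numpy as np

def principal_axis_angle_deg(mask):
    """Return the orientation of a binary mask's long axis in degrees, in [0, 180)."""
    ys, xs = np.nonzero(mask)
    if xs.size < 2:
        raise ValueError("mask needs at least two foreground pixels")
    # Flip y so that "up" in the image counts as positive.
    pts = np.stack([xs, -ys], axis=1).astype(float)
    pts -= pts.mean(axis=0)
    # The eigenvector with the largest eigenvalue of the covariance
    # matrix points along the direction of greatest spread.
    cov = np.cov(pts, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    vx, vy = eigvecs[:, np.argmax(eigvals)]
    # An axis has no head or tail, so fold the angle into [0, 180).
    return float(np.degrees(np.arctan2(vy, vx)) % 180.0)

# Synthetic mask: a thin diagonal line rising from bottom-left to top-right.
demo_mask = np.zeros((50, 50), dtype=np.uint8)
for i in range(50):
    demo_mask[49 - i, i] = 1
demo_angle = principal_axis_angle_deg(demo_mask)
```

Note that the principal axis only gives an orientation, not a direction: it cannot tell the tip from the hilt on its own, so something extra (for example, the hand positions from pose estimation) would be needed to disambiguate.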

The Final Result

With both pose estimation and segmentation integrated, here’s what the final output looks like:

And for those who prefer raw data, here’s a snippet of the JSON output:

    {
        "initial_frame": 0,
        "final_frame": 29,
        "initial_shinai_angle": 68.5474508516025,
        "final_shinai_angle": 68.35107667769117,
        "initial_relative_angle": 30.892083265533472,
        "final_relative_angle": -2.0885390189406365,
        "cut_classification": "small_cut"
    }

Polishing it took more time than I anticipated, but this POC demonstrates something important: we can successfully track a shinai’s movement using a combination of pose estimation and instance segmentation. While the current implementation is basic, proving this concept opens up possibilities for more sophisticated Kendo analysis tools.
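If you want to poke at the JSON summary yourself, a small sketch like the following is enough to pull out a couple of derived numbers. The field names come from the snippet above. The helper `summarize_cut` and the derived keys are my own illustration, and how the relative angle is defined depends on the pipeline, so treat the interpretation as an assumption.

```python
import json

# The same values as the JSON snippet shown earlier.
summary_text = """
{
    "initial_frame": 0,
    "final_frame": 29,
    "initial_shinai_angle": 68.5474508516025,
    "final_shinai_angle": 68.35107667769117,
    "initial_relative_angle": 30.892083265533472,
    "final_relative_angle": -2.0885390189406365,
    "cut_classification": "small_cut"
}
"""

def summarize_cut(summary):
    """Derive a few simple numbers from one cut summary (hypothetical helper)."""
    return {
        # Number of frames covered, counting both endpoints.
        "frames_spanned": summary["final_frame"] - summary["initial_frame"] + 1,
        # Change between the first and last frame for each angle.
        "shinai_angle_change": summary["final_shinai_angle"] - summary["initial_shinai_angle"],
        "relative_angle_change": summary["final_relative_angle"] - summary["initial_relative_angle"],
        "cut_classification": summary["cut_classification"],
    }

stats = summarize_cut(json.loads(summary_text))
```

Dividing `frames_spanned` by the video's frame rate would give the duration of the cut, which could be a handy number to track over time.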

Currently, the system only works with side-view analysis, but with the core pipeline established, we can expand to:

- Multi-angle analysis: Adding front-view analysis for techniques like sayu-men (diagonal cuts)

- Pose-based corrections: Detecting common beginner mistakes like:

  - Insufficient arm raising during strikes

  - Inadequate pulling back of the shinai

  - Improper foot positioning

- Practice tracking: Counting your daily suburi to help maintain practice consistency

- Form comparison: Using pose estimation to analyze differences between your form and reference movements from experienced senseis
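As a taste of how the practice-tracking idea might work, here is a rough sketch of counting swings from a per-frame shinai angle series. None of this exists in the project yet. The function name `count_swings` and both thresholds are invented for illustration and would need tuning against real footage. It uses two thresholds (hysteresis) so that small jitter near a single cutoff does not get counted as extra swings.

```python
def count_swings(angles_deg, raised_above=60.0, lowered_below=20.0):
    """Count swings as "raised, then lowered" cycles in an angle series.

    angles_deg: one shinai angle per frame, in degrees.
    raised_above / lowered_below: hypothetical thresholds marking the
    top and the bottom of a swing.
    """
    count = 0
    raised = False
    for angle in angles_deg:
        if not raised and angle >= raised_above:
            # The shinai has gone up far enough to start a swing.
            raised = True
        elif raised and angle <= lowered_below:
            # It came back down: one full swing completed.
            raised = False
            count += 1
    return count

# Made-up angle series containing two up-and-down cycles.
demo_angles = [10, 40, 70, 80, 30, 10, 15, 65, 90, 50, 5]
demo_count = count_swings(demo_angles)
```

A real version would likely smooth the angles first and ignore frames where the segmentation missed the shinai.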

While this tool might offer some interesting stats for experienced practitioners, it’s primarily designed with beginners in mind. The goal is to supplement (not replace) proper instruction from your senseis. It’s also worth noting that there are technically no “correct” forms - every style is unique!

What’s Next?

This is just the first part of a planned series of two or three blog posts. In the next post, I plan to integrate this pipeline with Xircuits’ new Gradio component library for a frontend. After that, I’m excited to explore creating a Kendo AI Agent. While building this project, I’ve been amazed (and slightly disturbed) by how powerful today’s LLMs are. I can’t wait to share my findings on that.

If you’d like to explore what I’ve built so far, I’ve open-sourced the code here: Project AI Kendo. If you think the project is interesting, please give the repository a star. Thanks for reading!